Recently, Apple has refused to comply with a court order obtained by the FBI, resulting in significant controversy. The FBI is attempting to access an iPhone used by one of the San Bernardino attackers, but Apple says that breaking the phone’s security would set a dangerous precedent.

As we regularly deal with difficult security issues, we wanted to present both sides of the argument and provide an analysis of some of the technical claims present in the case.

What Is the FBI Asking of Apple?

The FBI is asking for help, but what exactly are they asking for?

Contrary to some reports, they are not asking for Apple to break the encryption on a particular phone, provide the encryption keys for it, or provide some backdoor entrance to the phone. Apple has stressed that they cannot directly help anyone get into a specific phone. They do not save encryption keys, and they cannot create a backdoor to individual units.

The FBI is asking for a customized version of iOS, the operating system used by the iPhone, iPad, and iPod Touch.

But before declaring that this is the same thing as asking for a backdoor, let’s back up and provide some information about Apple products and their encryption standards.

iPhone Encryption and Passcode Attempts

Recent iPhones and iOS versions have had solid encryption that doesn’t appear to have any serious weak points.

That is unlike the iPhone 4 and older models, which have known weaknesses that make them relatively easy to crack. The stronger protection on newer models has been a problem for law enforcement for some time.

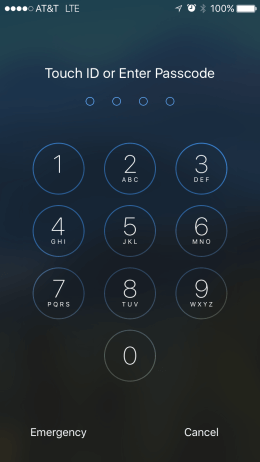

A user unlocks their phone either with a fingerprint on the Home button or by entering a passcode, which is 6 digits by default but may be just 4 digits.

This unlock step gives iOS access to further encryption keys protected by the phone’s hardware, and iOS uses those keys to decrypt data during normal operation of the phone.
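
The reason the passcode and the hardware work together is that the passcode alone isn’t enough to rebuild the keys; it has to be combined with a secret built into that specific phone. The sketch below is only a conceptual stand-in: Apple describes “tangling” the passcode with a per-device hardware key inside the phone’s crypto engine, whereas here Python’s standard PBKDF2 is used with a made-up `device_secret` playing that role. The names `derive_unlock_key`, `device_a`, and `device_b` are hypothetical.

```python
import hashlib


def derive_unlock_key(passcode: str, device_secret: bytes, iterations: int = 100_000) -> bytes:
    """Derive a 256-bit key from a passcode plus a per-device secret.

    Conceptual stand-in only: on a real iPhone the device secret never leaves
    the hardware, so this derivation can only be carried out on the phone itself.
    """
    return hashlib.pbkdf2_hmac("sha256", passcode.encode("utf-8"), device_secret, iterations)


# Hypothetical per-device secrets; real hardware keys cannot be read by software.
device_a = b"\x01" * 32
device_b = b"\x02" * 32

key_a = derive_unlock_key("123456", device_a)
# The same passcode on a different device yields an unrelated key.
key_b = derive_unlock_key("123456", device_b)
print(key_a != key_b)  # True
```

Because the device secret can’t be copied off the phone, passcode guesses have to be made on the phone itself, which is why the retry limits iOS enforces matter so much.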

A 4-digit passcode is really short, as you might know if you’re interested in tech security. Many web forms with “password strength” indicators would automatically label a four-digit code “weak.”

In fact, there are only 10 × 10 × 10 × 10 = 10,000 possibilities. Assuming about 5 seconds per attempt, a person could sit down and try them all in roughly 14 hours (10,000 tries × 5 seconds/try ÷ 60 seconds/minute ÷ 60 minutes/hour ≈ 13.9 hours). For a 6-digit passcode (1,000,000 possibilities), this would take over 57 days.
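
The same arithmetic works for any passcode length: the number of possibilities is 10 raised to the number of digits, multiplied by the time per try. The 5-seconds-per-try figure is the same rough assumption used in the paragraph above, and `brute_force_hours` is just an illustrative name.

```python
def brute_force_hours(digits: int, seconds_per_try: float) -> float:
    """Worst-case time, in hours, to try every numeric passcode of a given length."""
    possibilities = 10 ** digits
    return possibilities * seconds_per_try / 3600


four_hours = brute_force_hours(4, 5)
six_days = brute_force_hours(6, 5) / 24
print(f"4 digits: {four_hours:.1f} hours")  # 4 digits: 13.9 hours
print(f"6 digits: {six_days:.1f} days")     # 6 digits: 57.9 days
```

These are worst-case figures; on average the right code turns up about halfway through the search.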

The key protection here is that iOS limits failed passcode attempts. After five incorrect attempts, it delays your next attempt by 1 minute. After another failed attempt, you’ll have to wait 5 minutes. The timeout keeps increasing, to 15-minute delays and then a 60-minute delay.

Finally, after the 10th failed try, iOS will wipe the phone if its optional Erase Data setting is turned on. It doesn’t technically have to erase the phone’s storage, but the result is identical: by discarding one of the required encryption keys, it permanently prevents decryption.
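
To see how little room this leaves an attacker, here is a toy model of the retry limits with the wipe option enabled. The delay schedule (none for the first four failures, then 1, 5, 15, 15, and 60 minutes) is an assumption based on the table Apple published in its iOS Security Guide at the time; `LockScreen` and `delay_minutes` are hypothetical names, not iOS APIs.

```python
def delay_minutes(failures: int) -> int:
    """Lockout delay after the given number of consecutive failed attempts.

    Assumed schedule: no delay for 1-4 failures, then 1, 5, 15, 15 and 60 minutes.
    """
    schedule = {5: 1, 6: 5, 7: 15, 8: 15, 9: 60}
    return schedule.get(failures, 0)


class LockScreen:
    """Toy model of the passcode retry limits, with wipe-after-10 enabled."""

    WIPE_AFTER = 10

    def __init__(self, passcode: str) -> None:
        self._passcode = passcode
        self.failures = 0
        self.minutes_waited = 0
        self.wiped = False

    def try_passcode(self, guess: str) -> bool:
        if self.wiped:
            raise RuntimeError("encryption key discarded; data is unrecoverable")
        if guess == self._passcode:
            self.failures = 0
            return True
        self.failures += 1
        if self.failures >= self.WIPE_AFTER:
            self.wiped = True  # models discarding a required encryption key
        else:
            self.minutes_waited += delay_minutes(self.failures)
        return False


phone = LockScreen("4821")
for n in range(10):  # tries 0000 through 0009, all wrong
    phone.try_passcode(f"{n:04d}")
print(phone.wiped, phone.minutes_waited)  # True 96
```

In this model an attacker gets exactly ten guesses out of 10,000, spread over about an hour and a half of enforced waiting, before the data is gone for good.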

The FBI’s Request for A New iOS

That brings us back to the FBI’s request.

The FBI says that they’re asking for something reasonable, given the current state of encryption, customer privacy, and business interests. They’re asking for a customized version of iOS, digitally signed by Apple, that can be loaded into the phone’s random access memory (not its main storage) so that the custom iOS can control the phone.

This version would have the lockout and wipe features disabled, so it would not erase the encryption key after 10 failed passcode attempts and would not introduce delays.

The FBI also requests that this customized version of iOS include code ensuring that it can run only on the iPhone bearing the unique identifier of Syed Rizwan Farook’s iPhone. Once this software is running, the FBI simply has to try passcodes until the phone unlocks.

They could do this by hand, which may take the aforementioned 14 hours for a 4-digit code, or, if they can input codes electronically, they could make one attempt every second, which would cover every 4-digit code in under three hours.
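
In code terms, the request boils down to two changes: a check that refuses to run anywhere but the target phone, and a guessing loop with no delays and no wipe. The sketch below is a toy model under those assumptions; `TARGET_DEVICE_ID`, `unlock_without_limits`, and `check_passcode` are hypothetical, and the real passcode check would run on the phone’s hardware rather than in a Python function.

```python
from typing import Callable, Optional, Tuple

# Hypothetical placeholder; the real device identifier is not given here.
TARGET_DEVICE_ID = "HYPOTHETICAL-DEVICE-ID"


def unlock_without_limits(
    device_id: str,
    check_passcode: Callable[[str], bool],
    digits: int = 4,
) -> Tuple[Optional[str], int]:
    """Try every numeric passcode in order, with no delays and no wipe.

    Returns (passcode, attempts), or (None, attempts) if nothing matched.
    """
    # The requested build would refuse to run on any other device.
    if device_id != TARGET_DEVICE_ID:
        raise PermissionError("this build only runs on the target device")
    for attempts, n in enumerate(range(10 ** digits), start=1):
        guess = f"{n:0{digits}d}"  # zero-padded, e.g. "0042"
        if check_passcode(guess):
            return guess, attempts
    return None, 10 ** digits


secret = "7351"
code, tries = unlock_without_limits(TARGET_DEVICE_ID, lambda guess: guess == secret)
print(code, tries)  # 7351 7352
```

At one attempt per second, those 7,352 tries would take a little over two hours, and no 4-digit code could hold out longer than 10,000 seconds.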

Some would argue that the FBI would then do the actual hacking, not Apple. To us, it still seems like a team effort.

Apple’s Security Concerns Regarding The FBI’s Request

That part about the unique identifier seemingly limits the effect of this request to the one iPhone in FBI custody, but Apple has bigger things to worry about. Leaks are always a danger, so even having their employees work on this project may pose a risk to Apple.

The possibility of a source code leak is nothing new. Apple’s code in iOS is proprietary, not open source like Android, so they protect it vigorously. However, if they complete this specialized new version of the system, they might inadvertently increase the chances of a leak of the original code.

There’s also a chance that the final compiled product could leak, and that’s likely a more significant risk, since more parties are involved. In that case, it may be possible (if difficult) for someone to modify the unique identifier. That would mean the modified version of iOS could be used on any iPhone, and Apple’s products would be less secure as a result. The iPhone’s requirement of Apple-signed firmware may mitigate this, since altering the build would invalidate its signature (though a leak of Apple’s signing key is yet another risk). But it doesn’t help at all with the next concern…
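
Why would a signature requirement help? Because editing even a few bytes of a signed build, such as the device identifier, makes its signature stop matching. The sketch below illustrates that idea with Python’s standard `hmac` module. This is a deliberate simplification: real firmware signing uses public-key signatures, so the phone only needs Apple’s public key, whereas HMAC uses one shared secret. `SIGNING_KEY`, `sign`, and `phone_accepts` are hypothetical names.

```python
import hashlib
import hmac

# Hypothetical stand-in for Apple's private signing key.
SIGNING_KEY = b"hypothetical-apple-signing-key"


def sign(image: bytes) -> bytes:
    """Produce a signature (here an HMAC-SHA256 tag) over a firmware image."""
    return hmac.new(SIGNING_KEY, image, hashlib.sha256).digest()


def phone_accepts(image: bytes, signature: bytes) -> bool:
    """The phone only boots images whose signature checks out."""
    return hmac.compare_digest(sign(image), signature)


build = b"custom-ios-build;ALLOWED_DEVICE_ID=AAAA1111"
signature = sign(build)

# Someone holding a leaked copy edits the identifier but cannot re-sign it.
tampered = build.replace(b"AAAA1111", b"BBBB2222")

print(phone_accepts(build, signature))     # True
print(phone_accepts(tampered, signature))  # False
```

The last point in the paragraph shows up here too: anyone holding `SIGNING_KEY` could simply call `sign(tampered)` and produce a build the phone would accept, which is why a leaked signing key would be so serious.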

What Apple really doesn’t want is for a precedent to be set that they’ll help law enforcement crack into their customers’ phones. That would be bad PR, and such requests would likely come over and over in the future. Law enforcement would also see this precedent and then ask for slightly more technical help the next time, forcing Apple to argue their case again in hopes of drawing a line around the help they provide.

The FBI may win out, since this case involves terrorism and mass murder; in the court of public opinion, national security usually trumps personal privacy. The Bureau has deliberately made a narrow, specialized request in order to avoid claims that this constitutes unreasonably burdensome technical assistance (we’re not lawyers, so we’ll leave that argument to the attorney blogs).

But if Apple does claim that this is too difficult, the FBI may then move to request the iOS source code so that they can make the modifications themselves. We don’t want to speculate on how that might go. The law enforcement community is watching extremely closely because there are plenty of other iPhones that authorities would love to be able to access.

Possible Future Changes to Squash Decryption Efforts

Looking to the future, Apple may choose to adjust their phone unlocking scheme to prevent such requests before they even occur. They made great strides in iOS 8 to strengthen the iPhone’s encryption and to prevent this exact type of situation with law enforcement. Additional changes might strengthen their position in future disputes.

If the passcode were 10 digits, for instance, a brute-force attack could take centuries even at one try per second, and well over a thousand years at 5 seconds per try. That might be a little unreasonable for users, though, since most people can reliably remember only about seven random digits.
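
For a sense of scale, here is the same worst-case arithmetic used earlier for 4- and 6-digit codes, applied to a 10-digit code at the manual pace of 5 seconds per try and at the electronic pace of one try per second (taking a year as roughly $3.156 \times 10^{7}$ seconds):

```latex
\begin{aligned}
10^{10}\ \text{codes} \times 5\ \tfrac{\text{s}}{\text{try}}
  &= 5 \times 10^{10}\ \text{s}
  \approx \frac{5 \times 10^{10}\ \text{s}}{3.156 \times 10^{7}\ \text{s/yr}}
  \approx 1{,}584\ \text{years} \\
10^{10}\ \text{codes} \times 1\ \tfrac{\text{s}}{\text{try}}
  &= 10^{10}\ \text{s}
  \approx 317\ \text{years}
\end{aligned}
```

On average an attacker would hit the right code about halfway through, which still leaves several centuries at either pace.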

The fingerprint unlocking mechanism is probably much harder to brute-force than a 4-digit passcode as well, since a fingerprint has many more distinguishing points. That means more required digits (no pun intended). Apple could eliminate the passcode entirely and rely on the fingerprint alone, although some users might not like this. Alternatively, they could switch to a completely different unlocking mechanism.

In the end, it comes down to the age-old conflict of privacy vs. law enforcement. Without taking sides, we think this is an interesting case, to say the least, and it’s certainly worthy of all of the mainstream attention.